Build your own bare-metal cloud with NVIDIA DPF Zero Trust

Unlocking new use cases for DPUs

The onboard compute capabilities of NVIDIA BlueField-3 adapters create all kinds of interesting opportunities in both supercomputing and bare metal cloud scenarios.

BlueField-3 SuperNIC adapters play a pivotal role in NVIDIA Spectrum-X architectures for accelerated RDMA over Converged Ethernet (RoCE), while BlueField-3 DPU adapters provide CPU-offload capabilities for north-south traffic in AI clusters. But with the DPU specifically, there are use cases for regular enterprises too.

First came the accelerated Kubernetes pod networking solution, known as DPF or the DOCA Platform Framework, released early in 2025.

DPF delivers up to 800 Gbps line-rate performance to your Kubernetes pods by offloading the entire OVN-based Kubernetes pod network onto the DPU and giving every pod on the worker nodes its own hardware-accelerated NIC, in the form of a virtual function (VF) passed to the pod via SR-IOV. You can read more about it in our blog here.

Meet DPF Zero Trust Mode

In early September 2025, NVIDIA released the first version of an entirely new DPF use case, called DPF Zero Trust Mode (DPF-ZT).

To differentiate the two use cases, the accelerated pod network solution is now called DPF Host-Trusted Mode, for its tight coupling of host OS+Kubernetes software to the software running on the DPU itself.

The new DPF Zero Trust Mode provides an entirely new and different solution: the ability to build your own bare-metal cloud.

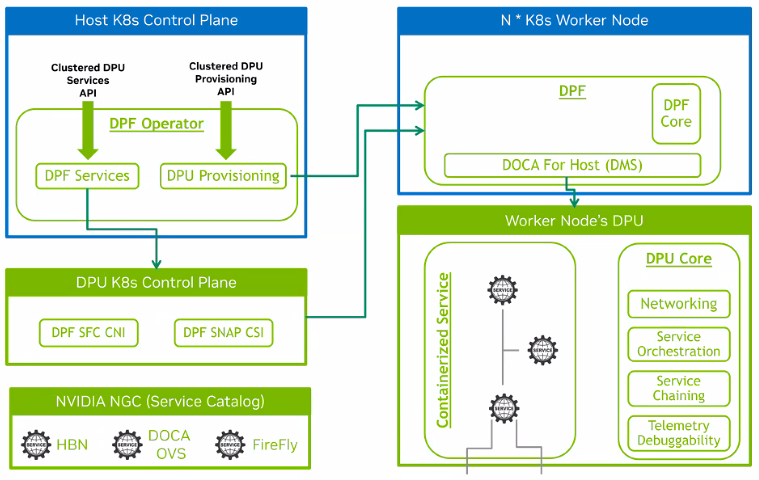

The two modes are so different from each other that we need to first take a step back in order to properly explain how the base DPF technology works, and then explore the difference between the two use cases. This is a diagram from our friends at NVIDIA explaining what DPF is:

The main goal of DPF is to provide a standardized framework for deploying applications, called DPU Services, to BlueField-3 DPU adapters.

These adapters run their own OS, and it would be tedious if you had to manage this separately from the host OS+Kubernetes stack.

Instead, DPF runs a virtual Kubernetes cluster somewhere, using Kamaji and its own Kubernetes API endpoint, called the DPF Control Plane.

The OS on each DPU has a kubelet service that joins the virtual DPF Control Plane. You can then deploy apps to the DPU by simply defining DPUService custom resources in the DPF Control Plane. The DPU always runs an OVN-based CNI that will control the two 400GbE ports on the DPU.
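
To make the idea of "defining a DPUService custom resource" a little more concrete, here is a minimal Python sketch that builds such a resource as a plain dictionary and prints it as JSON (which Kubernetes tooling accepts alongside YAML). Treat everything specific in it as an assumption: the API group and version, the `helmChart` layout, the namespace, and the chart coordinates are illustrative placeholders, not values taken from this article, so check the DPF API reference for the schema your release actually uses.

```python
import json

# Assumption: this API group/version and the spec layout below are
# illustrative and may not match the DPF release you are running.
ASSUMED_API_VERSION = "svc.dpu.nvidia.com/v1alpha1"


def dpu_service_manifest(name, namespace, chart_repo, chart, version):
    """Build a DPUService-style custom resource as a plain dict."""
    return {
        "apiVersion": ASSUMED_API_VERSION,
        "kind": "DPUService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Assumed layout: the service is delivered as a Helm chart.
            "helmChart": {
                "source": {
                    "repoURL": chart_repo,
                    "chart": chart,
                    "version": version,
                }
            }
        },
    }


# All values below are hypothetical placeholders.
manifest = dpu_service_manifest(
    name="example-service",
    namespace="dpf-operator-system",
    chart_repo="https://charts.example.com",
    chart="example-chart",
    version="0.1.0",
)

# The resulting document would be applied against the DPF Control Plane
# API endpoint, not against the Kubernetes cluster running on the hosts.
print(json.dumps(manifest, indent=2))
```

The point of the sketch is the workflow rather than the exact fields: the application is described declaratively, submitted to the DPF Control Plane, and the kubelets on the DPUs take it from there.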

It’s all about the control plane

The difference between Host-Trusted Mode and Zero Trust Mode is primarily where this DPF Control Plane runs. In DPF Host-Trusted Mode, the DPF Control Plane runs as a set of pods inside the Kubernetes cluster that is running on the host OS:

The Kubernetes worker nodes join the “real” Kubernetes cluster, while the kubelet on the DPU joins the virtual Kubernetes cluster that is the DPF Control Plane (depicted as “DPU K8s Control Plane” in the diagram above).

In this model, a DOCA DMS pod on each worker takes care of flashing the DPU with the desired firmware and configuring it for use, all through an rshim connection available through the DOCA software present in the pod. The OVN-Kubernetes CNIs in the Kubernetes cluster on the hosts are integrated with the OVN-Kubernetes CNI running inside the DPUs, which is what makes the accelerated pod networking possible.

You can also see why this is called DPF Host-Trusted Mode: there is an implicit trust between the software on the host OS and the software on the DPU. They work together to deliver higher performance.

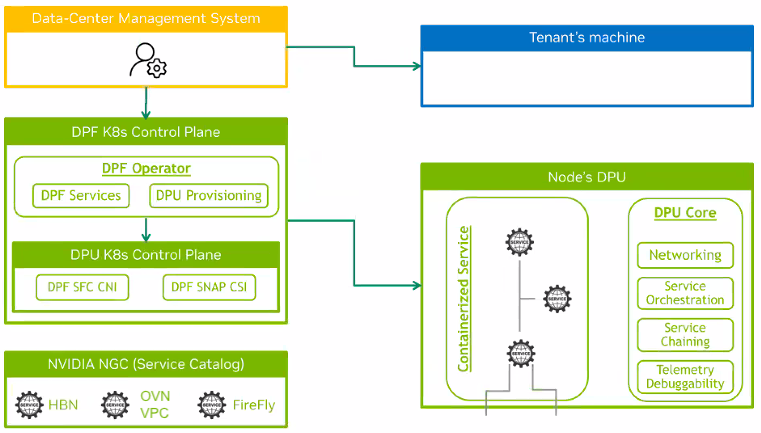

When we switch to DPF Zero Trust Mode, the picture becomes very different:

Now, the host OS (“Tenant’s machine” in the picture above) is implicitly not trusted at all. The DPU no longer cares what happens in the host OS, nor does it integrate with it in any way.

The DPU is now used to ensure that the host OS is only getting access to the network (and other optional resources) that it is allowed to.

If you’re thinking that sounds familiar, you’re right: it’s very similar to the AWS Nitro cards in bare metal server instances. Ever wondered why, even though you can run a bare-metal server instance in AWS, you still don’t get direct access to the physical network? It’s because one of those Nitro cards is handling the networking and it doesn’t trust anything running on the host. It is configured through a separate Out-Of-Band (OOB) network and it ensures your bare metal instance is connected only to your VPC and nothing else.

So what can you do with DPF Zero Trust Mode?

With DPF Zero Trust Mode, you can now achieve AWS Nitro-style zero trust isolation using the bare metal servers in your own data center! There are several main features available in this mode:

- VPC networking: the ability to define your own Virtual Private Cloud (VPC) networks and connect servers through a virtual fabric delivered by the DPUs. This is powered by the OVN VPC plugin.

- NVIDIA Argus: Workload Threat Detection in AI workloads and microservices, using a BlueField DPU to perform live machine introspection at the hardware level. This approach analyzes specific snippets of volatile memory to provide real-time visibility into container activity and behavior at the network, host, and application levels.

- HBN networking: this allows NVIDIA Host Based Networking (HBN) to be used as a BGP Layer 3 routed network between all DPUs, providing highly scalable and performant underlay networking.

- SNAP-based storage: the SNAP plugin provides the ability to mount remote storage over any protocol and deliver it to the host as a VirtIO-based storage device. Since Linux has drivers for VirtIO storage devices as standard, it can use these devices immediately. You can even have the boot device coming from SNAP and run the bare metal servers without any local storage at all.

- NVIDIA Firefly: for highly precise time synchronization based on ptp4l.

Bringing ZT to life

So the use cases that DPF Zero Trust Mode enables are pretty cool. But how can you put it into practice? Taking another look at the diagram above, several questions might come to mind:

- Where does the DPF Control Plane (on the left of the diagram) run, if the tenant machines are untrusted?

- How are the DPUs getting configured, if configuration can’t happen through the host OS?

- What’s that yellow box called “Data-Center Management System” doing there?

All very good questions, and another example of how DPF Zero Trust Mode is very different from Host-Trusted Mode.

In Host-Trusted Mode, every Kubernetes cluster runs its own DPF Control Plane inside it, so everything is self-contained. In Zero Trust Mode, we don’t involve the host machines at all. Instead, a single external DPF Control Plane (for which you will need three VMs or small servers) controls all the DPUs in all servers.

The DPUs are controlled via their 1GbE OOB BMC ports (while in Host-Trusted Mode, this port goes unused). The DPF Control Plane has discovery capabilities to find all the DPUs in the OOB subnet and bring them under management. Firmware flashing and DPU configuration both happen through this BMC port. This is the main aspect of the zero-trust nature of the solution: DPUs are configured through an OOB network, and the tenant server has no way to access the DPU, the DPU BMC or the DPF Control Plane.

To activate the features available to Zero Trust Mode, we need to deploy the plugins we’re interested in, for example OVN VPC and DOCA Argus. This is done in the form of DPF custom resources, as described here.

Some of these plugins require configuration in real time as tenant servers get provisioned, a prime example of that being the OVN VPC service. Whenever a customer deploys one or more bare metal servers to a VPC (or deploys a whole Kubernetes cluster consisting of bare metal servers), some extra steps need to happen:

- The customer needs to choose which VPC to deploy these servers into, or create a new VPC.

- The customer needs to choose which virtual network inside that VPC to deploy these servers into, or create a new virtual network.

- The DPUs in the bare metal servers selected for provisioning need to be reconfigured to give the host machine access to the selected VPC and virtual network.

In the case of a Kubernetes cluster with auto-scaling, all these things need to happen dynamically as servers are added to and removed from the cluster, so it’s not a one-time activity.
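
Because this has to happen continuously rather than once, it helps to think of it as a reconciliation problem: compare what the DPUs currently expose with what the cluster now needs, and act only on the difference. The Python sketch below illustrates that idea under stated assumptions. The `Attachment` record, the `plan_dpu_changes` function, and the server, VPC and network names are all hypothetical; none of them are part of DPF or any Spectro Cloud product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attachment:
    """Hypothetical record: the VPC and virtual network a server's DPU exposes."""
    vpc: str
    network: str


def plan_dpu_changes(current, desired):
    """Diff current vs. desired attachments, both keyed by server name.

    Returns (to_attach, to_detach):
      to_attach: servers whose DPUs must be (re)configured, with the target
      to_detach: sorted names of servers that left the cluster
    """
    to_attach = {
        server: target
        for server, target in desired.items()
        if current.get(server) != target
    }
    to_detach = sorted(server for server in current if server not in desired)
    return to_attach, to_detach


# State before an auto-scaling event: two servers in the cluster.
current = {
    "server-1": Attachment("vpc-a", "net-1"),
    "server-2": Attachment("vpc-a", "net-1"),
}
# State after: server-2 was removed and server-3 was added.
desired = {
    "server-1": Attachment("vpc-a", "net-1"),
    "server-3": Attachment("vpc-a", "net-1"),
}

to_attach, to_detach = plan_dpu_changes(current, desired)
print(to_attach)  # only server-3 needs its DPU configured
print(to_detach)  # only server-2 needs its DPU access withdrawn
```

Note that `server-1` appears in neither result: a server whose attachment is unchanged should not have its DPU touched, which is what keeps scaling events from disturbing running workloads.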

Directing the DPF control plane and realizing the vision of a bare metal cloud

And this is where we come back to the diagram, and the yellow box named “Data-Center Management System”. The functions listed above need to be handled by some additional platform, because DPF does not provide those capabilities itself.

At Spectro Cloud, we’re working to implement the DPF Zero Trust management capabilities into our Palette platform. Unlike other management platforms, we are very comfortable in the world of bare metal, and in many ways these new capabilities are a natural extension of the work we already do to support the deployment of bare metal Kubernetes clusters from scratch through our Cluster API provider for Canonical MAAS.

If you’re not familiar with MAAS, it stands for Metal As A Service. MAAS is a bare metal OS installation platform that uses PXE boot to turn racks of bare metal servers into dynamic resource pools. It’s a bare cloud of capacity. All it needed was dynamic VPC networking to turn it into a full bare metal cloud, so it makes total sense for us to add this capability.

Our Cluster API provider for MAAS will trigger additional interactions with the DPF Control Plane to reconfigure DPUs as bare metal Kubernetes clusters get deployed. Since our MAAS Cluster API provider is responsible for adding nodes to and removing nodes from the bare metal Kubernetes cluster, it can trigger the adjustment of DPU configurations dynamically as servers come and go.

Because this is a zero trust solution, we of course can’t do this directly from the MAAS provider. Instead, the MAAS provider informs Palette of a server addition or removal event. Palette then determines which bare metal servers are affected and interacts with the DPF Control Plane to adjust the DPU configurations accordingly.
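
The separation of duties described above can be sketched in a few lines of Python. This is only an illustration of the flow, not how Palette or DPF are implemented: `NodeEvent`, `RecordingDpfClient`, `handle_node_event` and the inventory mapping are hypothetical names, and the recording client simply stands in for whatever calls a real management platform would make to the DPF Control Plane.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeEvent:
    """Hypothetical event reported by the provisioning side."""
    kind: str      # "added" or "removed"
    server: str
    vpc: str
    network: str


class RecordingDpfClient:
    """Stand-in for a DPF Control Plane client; it only records calls."""

    def __init__(self):
        self.calls = []

    def attach(self, dpu, vpc, network):
        self.calls.append(("attach", dpu, vpc, network))

    def detach(self, dpu):
        self.calls.append(("detach", dpu))


def handle_node_event(event, inventory, dpf):
    """Management-platform side: map the server to its DPUs, then act.

    The provisioning side never talks to the DPF client itself; it only
    reports the event, which keeps DPU control out of the tenant path.
    """
    if event.kind not in ("added", "removed"):
        raise ValueError(f"unknown event kind: {event.kind}")
    dpus = inventory.get(event.server, [])
    for dpu in dpus:
        if event.kind == "added":
            dpf.attach(dpu, event.vpc, event.network)
        else:
            dpf.detach(dpu)
    return len(dpus)


# Hypothetical inventory: which DPUs sit in which bare metal server.
inventory = {"server-3": ["dpu-3a"], "server-2": ["dpu-2a"]}
dpf = RecordingDpfClient()

handle_node_event(NodeEvent("added", "server-3", "vpc-a", "net-1"), inventory, dpf)
handle_node_event(NodeEvent("removed", "server-2", "vpc-a", "net-1"), inventory, dpf)
print(dpf.calls)
```

The design choice worth noticing is that only the management platform holds the server-to-DPU inventory and the DPF client, so neither the tenant host nor the provisioning component ever needs credentials for the OOB side.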

Next steps

To learn more about how we’re working with NVIDIA to bring this and other use cases to life, come meet us at NVIDIA GTC in Washington DC, or at KubeCon AI Day in Atlanta.

In the meantime, you can check out more about how we use Canonical MAAS for bare metal provisioning, or read more about our solutions for NVIDIA DPUs and GPUs.